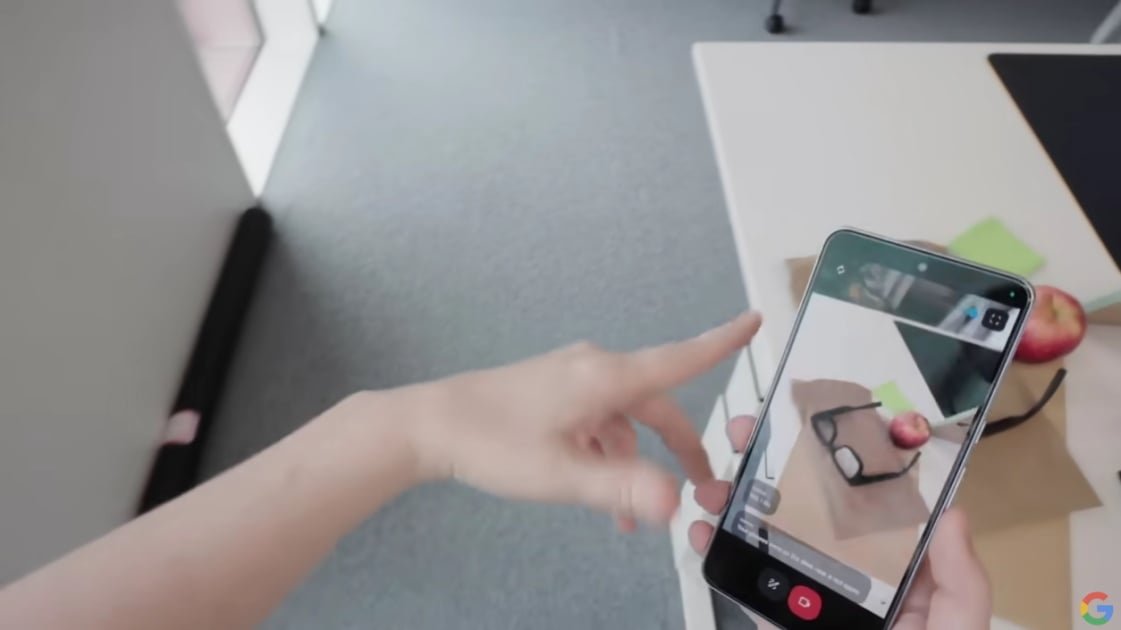

Google I/O had plenty of news to cover last week, but one tiny detail was overlooked by most outlets (save for The Verge): prototype AR glasses.

During a demo of Project Astra, a Google employee uses the AI agent to find her glasses and put them on. The phone view disappears and seems to be replaced by an AR view through the glasses themselves, with a display.

This isn’t a lot to go on, and it’s surprising considering Google canceled its Project Iris glasses last year. The company hasn’t announced any smart glasses, but the clip offers enough to let us make some strong guesses about how they work. In fact, because I cover AR glasses and mixed reality headsets, I happen to have a pair that seems to be very, very similar to the ones in Google’s demo.

TCL RayNeo X2 vs. Google’s Mystery Smart Glasses

TCL RayNeo X2 (Credit: Will Greenwald)

The TCL RayNeo X2 is a pair of AR smart glasses I’ve been waiting to finish reviewing due to software issues. It has a display built into the lenses, speakers and a camera built into the frames, plus microphones and motion sensors. It connects wirelessly to a phone through its own app, has an AI voice assistant, and can use its sensors and camera along with the phone’s GPS to give information based on where you are, which way you’re facing, and what the camera sees.

While the X2’s concept of providing a display in front of your eyes is similar to the Editors’ Choice Viture Pro XR and Rokid Max, it’s otherwise very different. It connects wirelessly to a phone while the Viture and Rokid glasses use DisplayPort-over-USB-C. The X2 also has its own interface powered by a Qualcomm Snapdragon XR2 Gen 1 chip instead of relying entirely on the video output and processing of the connected device.

The X2’s AI assistant and most apps are handled primarily by the glasses themselves and mostly use the phone for an internet connection and edge processing. Its camera and ability to identify where you are and what you’re looking at enable far more augmented reality features than the wired smart glasses offer. Those are mostly personal displays designed to be used in a stationary position, with some limited AR features that rely on their 3-degrees-of-freedom (3DOF) motion sensors and work only with some devices.

What made me think of the X2 during the Google demo were the flat lenses. The video smart glasses I’ve tested so far use fairly bulky lens-and-projector arrangements with an angled prism that directs a downward-facing projection into the eye. They have lenses on the front, but those are used for privacy and protection, not the projected picture.

The X2 uses a single flat lens for each eye with a waveguide etched inside it. Light is sent directly through the lens and bounces off the rectangular waveguide area, forming a picture. Because there’s only one layer of transparent material between your eyes and the outside world (two if you get prescription lens inserts), you can look straight through the glasses and see your surroundings easily even as you look at whatever the glasses themselves are showing you.

This makes the X2 much more feasible to use safely when walking around than the tethered, angled-lens smart glasses, whose multiple layers eat up a lot of light and make your surroundings look significantly dimmer.

Display on the X2 glasses (Credit: Will Greenwald)

Hardware-wise, Google’s prototype looks very similar to the X2: flat lenses, wireless connection, and Google-powered (Snapdragon is primarily an Android hardware platform). Even the overlaid interface in the demo video has a minimalist configuration with text and a visual indicator that a voice assistant is listening. (This was almost certainly edited in after the fact; it’s extremely hard to capture any kind of clear view of smart glasses displays.)

The X2 is probably close to what Google is working on, though with RayNeo software driving most of its features rather than Google’s own, and without any additional Google tweaks or upgrades. These aren’t the same smart glasses but they’re in the same neighborhood.

On paper, the X2 is a superior AR device to the Viture Pro XR, Rokid Max, and other tethered smart glasses. It’s wireless and has far more AR features, including its own AI assistant. Its display means you can actually see where you’re going instead of just the rest of the coffee shop you’re safely sitting in while trying to work.

That isn’t the case in execution, though. I’m not going to give the X2 a full review or a score just yet, but there are a lot of aspects that make this type of AR glasses feel far less polished and usable than the other kind. Those aspects are probably why Google hasn’t announced a hardware prototype yet and might be why it canceled Project Iris.

Google being cute during its Project Astra demonstration. (Credit: Google)

First, there’s the display. The flat lens means you can easily see what’s in front of you, but it also means you can see much less of what the glasses want to show you. This waveguide design offers a much smaller field of view than the angled-lens smart glasses I’ve reviewed before. The X2 has a 25-degree field of view, while the Viture Pro XR and Rokid Max have 46- and 50-degree fields of view, respectively.

That means the X2’s active picture is confined to a pretty small rectangle directly in front of your vision, and any visual indicator based on your location and orientation will simply disappear when you turn your head a bit, vanishing as soon as it touches the edge of that rectangle.
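To make that cutoff concrete, here is a minimal Python sketch of the geometry. It is not how any of these glasses actually work internally; it just assumes a marker anchored to a compass bearing and treats the quoted field-of-view figures as horizontal angles, which is a simplification. The function and variable names are hypothetical.

```python
def is_visible(marker_bearing_deg: float, head_yaw_deg: float, fov_deg: float) -> bool:
    """Return True if a world-anchored marker falls inside the display's field of view."""
    # Signed angle between where the head points and the marker, wrapped to [-180, 180).
    offset = (marker_bearing_deg - head_yaw_deg + 180.0) % 360.0 - 180.0
    # The marker is drawn only while it sits within half the field of view on either side.
    return abs(offset) <= fov_deg / 2.0


# Field-of-view figures quoted above, treated as horizontal angles (an assumption).
FOV_DEG = {"RayNeo X2": 25.0, "Viture": 46.0, "Rokid Max": 50.0}


def visible_on(head_yaw_deg: float, marker_bearing_deg: float = 0.0) -> dict:
    """Report, per device, whether the marker is still drawn at this head angle."""
    return {
        name: is_visible(marker_bearing_deg, head_yaw_deg, fov)
        for name, fov in FOV_DEG.items()
    }


if __name__ == "__main__":
    # Turn the head 15 degrees away from a marker that started dead ahead.
    print(visible_on(15.0))
```

Under these assumptions, turning your head 15 degrees is already enough to push the marker off a 25-degree display, while the wider displays would still show it.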

The other smart glasses don’t have full views like VR headsets and the Apple Vision Pro, and their more limited AR features also suffer from simply popping out of existence long before they reach the edge of your view. But they can at least project a much bigger picture, similar to sitting a comfortable distance away from a big-screen TV. The X2’s viewing area is about what you’d get holding your phone 2 feet from your face. One of the big reasons Google Glass failed (besides the price, privacy issues, and looking stupid) was its tiny field of view; you could barely see or read anything on it.

Second, there’s the phone connection. The X2 runs its own apps and a good chunk of its AI assistant on its own hardware, but it needs to stay connected to your phone to do anything useful, since it relies on an internet connection to get the information you’re looking for. The connection between the glasses and my test phone (a Google Pixel 8) regularly dropped, and the app that handled the connection stopped responding during testing. That’s one of the main problems I’ve been waiting on firmware and software updates to fix before finishing my write-up. Without an active connection, the X2 is basically useless.

The X2’s software is also pretty rough, but that’s something Google can completely replace, building a semi-standard AR platform for other devices like it in the process. Google can also probably smooth out wireless connectivity issues, at least with future Pixel phones.

Snapdragon XR2 Gen 1 is Qualcomm’s hardware platform for AR, and like other Snapdragon systems-on-chip, its connection features are built in along with the AR processing. Qualcomm has already released the more advanced Snapdragon XR2 Gen 2, and Google would probably be first in line for the reference hardware for any XR2 Gen 3. Each step up can potentially stabilize the link between the glasses and the phone. Google could even get a bit proprietary and add its own silicon to the mix for a Pixel-specific enhancement, as Apple does with its H2 chip for connecting AirPods to iPhones.

Google doesn’t want another Google Glass. (Credit: Google)

Improving the display is another story. The waveguide design is a good start, but every type of glasses-based AR device I’ve used (outside of full headsets I wouldn’t want to walk around in under any circumstances) has thrown me off with its limited field of view. I love information projected on top of whatever I’m looking at, but when it cuts off as soon as it leaves the direct center of my sight, it’s incredibly jarring and makes the experience unpleasant.

Google doesn’t seem to be developing its own version of that component and will have to rely on other manufacturers to improve it. Further miniaturizing the electronics and enlarging the display will take some time, and this particular technology still feels a good two or three generations away from really being compelling to consumers.

Google’s AR glasses approach might be completely different from what we’ve been shown, and it’s just as likely that the glasses shown in the Project Astra demo are secondary hardware intended just to give an idea of how the AR features and AI agent can work together on future third-party devices. Still, Project Iris might get some new life, become a new full prototype, and perhaps in a few years we’ll see Pixel Glasses. For now, though, we can keep an eye on AR smart glasses and see how this still-maturing device category develops.
